Published February 23, 2025 in Industry

Achieving Professional-Quality Translations with AI

Explore how AI and LLMs can achieve professional-quality translations, balancing cost, quality, and time effectively.

In the pre-LLM world, there were two main approaches to product translation:

- Machine translation with tools like Google Translate or DeepL
  - Capable of naively translating sentence A to sentence B
  - Fast and inexpensive, but often of limited quality

- Human translation
  - Capable of translating sentence A to sentence B while considering context:
    - The specific context of the product
    - The use of industry-specific jargon
    - Consistency with existing translations
  - Ensures high quality but requires more time and higher costs

Of these two solutions, the second clearly provides the better translation quality for a product, provided that translators have access to the necessary context.

But giving translators access to this context is not trivial:

- Either translators know the product perfectly: they have in mind the existing terminology, the meaning of each feature, where each text appears in the product, and the user journeys, and they know the industry very well.

- Or this context is formally specified with documentation, screenshots, and glossaries (specifying industry terms and their translations in each language), and translators must refer to them meticulously.

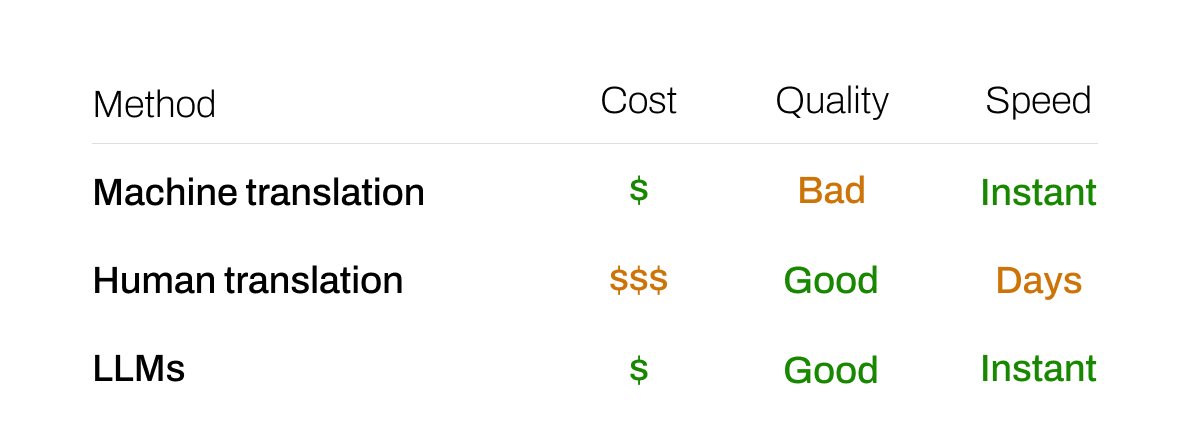

In the end, the assessment was simple: machine translation is inexpensive and fast, but of mediocre quality, while human translation offers better quality, but at a high cost and with a slower translation process.

The AI and LLM Revolution

Since the emergence of LLMs (Large Language Models), translation tools such as Prismy have embraced this revolution, hoping to significantly simplify this process.

If we compare the three approaches according to the cost/quality/time trifecta:

Translation methods comparison

While the cost and time factors are relatively uncontroversial, the quality factor deserves a more in-depth analysis.

How Prismy Ensures Translation Quality

Can I trust your AI to translate my product blindly?

This is a question we're frequently asked.

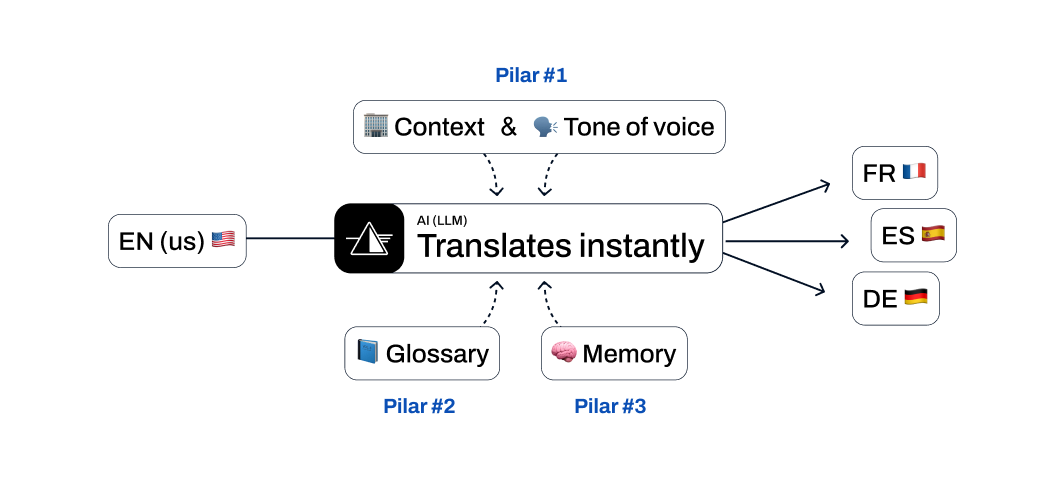

As we've seen, it's the consideration of context that allows translators to provide quality translations. The key therefore lies in the ability to take this context into account, and that's precisely where LLMs excel: they can analyze many inputs and adjust their output accordingly.

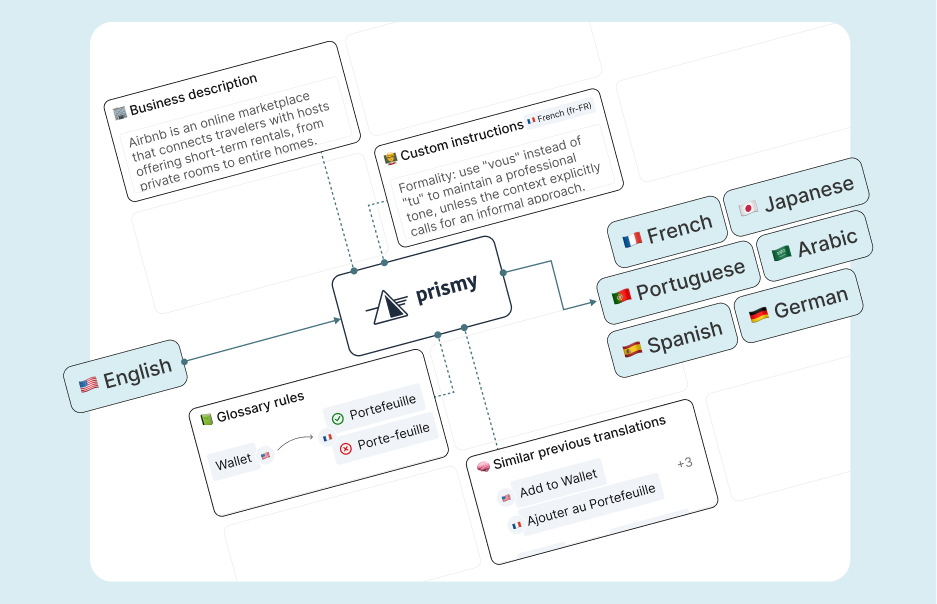

Prismy relies on three pillars to ensure translation quality:

Translation quality pillars

Pillar #1: Defining Context and Tone of Voice

The first step is to explain the context to the LLM, starting from the most general (the industry) to the most specific (the location of the wording in the product).

Prismy lets you define the industry, the product, and its purpose globally. With this alone, LLMs can already use the right jargon, which considerably improves translation quality.

Then, when Prismy translates product wordings, it sometimes needs to know which part of the product they will appear in. For this, Prismy lets you define instructions by product section. Prismy can also translate texts feature by feature, based on development branches, using the context attached to pull requests and other metadata.

Finally, Prismy also allows you to define the tone of voice to use by language and by product section.
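To make the "general to specific" idea concrete, here is a minimal sketch of how such context layers could be assembled into a prompt. It is an illustration only, not Prismy's implementation: the `TranslationContext` class, the `build_prompt` function, and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class TranslationContext:
    """Hypothetical container for context layers, most general first."""
    industry: str
    product: str
    # Instructions keyed by product section, e.g. "checkout".
    section_instructions: Dict[str, str] = field(default_factory=dict)
    # Tone of voice keyed by (language, product section).
    tone_of_voice: Dict[Tuple[str, str], str] = field(default_factory=dict)


def build_prompt(ctx: TranslationContext, text: str,
                 target_lang: str, section: str) -> str:
    """Assemble a prompt that goes from general context to the specific text."""
    lines = [
        f"Industry: {ctx.industry}",
        f"Product: {ctx.product}",
    ]
    instruction = ctx.section_instructions.get(section)
    if instruction:
        lines.append(f"Section ({section}): {instruction}")
    tone = ctx.tone_of_voice.get((target_lang, section))
    if tone:
        lines.append(f"Tone of voice: {tone}")
    lines.append(f"Translate into {target_lang}: {text}")
    return "\n".join(lines)
```

Layers that are not defined for a given section or language are simply skipped, so the global settings always apply and the specific ones refine them when present.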

Pillar #2: An exhaustive and up-to-date glossary

Each company has its own jargon: industry terms for which it wants to maintain consistency and, potentially, impose specific translations.

For this, companies often use glossaries to define these terms and their translations.

Human translators could keep in mind terms that were never formalized in a glossary, but this approach no longer works with AI. It becomes essential to document all specific terms formally and exhaustively. This task may seem tedious, but Prismy allows you to automatically generate and maintain a glossary for this purpose. This is a super interesting subject in itself, which I will explain in more detail in a dedicated article.
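As a rough illustration of how a formal glossary can be applied, the sketch below looks up which glossary terms occur in a source text and turns them into explicit instructions for the LLM. The glossary shape and both function names are assumptions made for this example; they do not describe Prismy's internals.

```python
import re
from typing import Dict, List


def find_glossary_terms(source_text: str,
                        glossary: Dict[str, Dict[str, str]],
                        target_lang: str) -> Dict[str, str]:
    """Return {term: imposed translation} for glossary terms found in the text.

    `glossary` is a hypothetical mapping: term -> {language code: translation}.
    Matching is case-insensitive on whole words and ignores inflected forms.
    """
    matches = {}
    for term, translations in glossary.items():
        pattern = r"\b" + re.escape(term) + r"\b"
        if target_lang in translations and re.search(
                pattern, source_text, re.IGNORECASE):
            matches[term] = translations[target_lang]
    return matches


def glossary_instructions(matches: Dict[str, str]) -> List[str]:
    """Turn matched terms into one instruction line per term."""
    return [f'Translate "{term}" as "{translation}".'
            for term, translation in sorted(matches.items())]
```

Only the terms that actually appear in the text are sent along, which keeps the instructions short even when the glossary itself is large.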

Pillar #3: Translation memory

The last pillar is to ensure consistency by relying on existing translations. Translation quality improves as the translation history grows. If a word or part of a sentence has already been translated elsewhere in the product, Prismy can instruct the LLM to reuse these existing translations and ensure global consistency.
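One simple way to picture a translation memory lookup is fuzzy matching against past source texts. The sketch below uses Python's standard `difflib.SequenceMatcher`; the `similar_translations` function, the memory format, and the 0.6 threshold are assumptions chosen for illustration, and a production system may well use a different similarity measure.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Tuple


def similar_translations(source_text: str,
                         memory: Dict[str, str],
                         threshold: float = 0.6,
                         limit: int = 3) -> List[Tuple[str, str]]:
    """Return up to `limit` (past source, past translation) pairs whose
    source text is similar to `source_text`, most similar first.

    `memory` is a hypothetical mapping: past source text -> its translation.
    """
    scored = []
    for past_source, past_translation in memory.items():
        ratio = SequenceMatcher(
            None, source_text.lower(), past_source.lower()).ratio()
        if ratio >= threshold:
            scored.append((ratio, past_source, past_translation))
    scored.sort(reverse=True)
    return [(src, tr) for _, src, tr in scored[:limit]]
```

The returned pairs can then be added to the prompt as examples, so that a new wording such as "Save your changes" stays consistent with an existing "Save changes".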

Is this the miracle solution?

Thanks to these three pillars, LLMs are revolutionizing product translation by ensuring high translation quality, while offering speed and low cost.

Is this enough? Our experience at Prismy shows that, with an optimal configuration, translations reach a very good level of quality, but focused human supervision remains valuable to achieve excellence. This is why Prismy also supports review workflows and prioritizes the most doubtful translations, so that reviewers only need to look at a few wordings.
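The prioritization idea can be sketched as a small review queue. This assumes each translation carries a confidence score between 0 and 1; how such a score would be computed is not specified here, and the `select_for_review` function and its default values are purely hypothetical.

```python
from typing import Dict, List


def select_for_review(translations: List[Dict],
                      max_reviews: int = 2,
                      confidence_threshold: float = 0.8) -> List[Dict]:
    """Pick the most doubtful translations for human review.

    Each item is a dict with a hypothetical "confidence" key in [0, 1].
    Items at or above the threshold are never sent to review.
    """
    doubtful = [t for t in translations
                if t["confidence"] < confidence_threshold]
    # Lowest confidence first, so reviewers start with the riskiest wording.
    doubtful.sort(key=lambda t: t["confidence"])
    return doubtful[:max_reviews]
```

Capping the queue with `max_reviews` reflects the goal of directing reviewers to only a few wordings rather than the whole product.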

With this strategy, it's now possible to run an automated process with a good initial configuration and well-targeted reviews, allowing the system to learn in real time!
